Hey there! This is the 4th post in my series on evaluating LLM-powered products, part of my ongoing effort to rediscover what data science means in the AI era. If you're interested in this topic, subscribe to get updates!

I’d love to hear your thoughts, experiences, or even disagreements. Drop a comment below, or reach out on LinkedIn. Let’s keep learning and figuring this out together!

What is Robustness?

Robustness in large language models (LLMs) refers to their ability to maintain reliable and accurate performance despite variations or perturbations in input, such as rephrased questions, typos, formatting changes, or adversarial prompts. Robustness is crucial because real-world users rarely provide perfectly phrased or sanitized inputs; queries naturally vary in wording and style.

A lack of robustness can lead to confusion, errors, or harmful outcomes, particularly in high-stakes domains such as education, healthcare, and legal assistance.

Types of Robustness Evaluations:

Adversarial Robustness: Resisting intentionally harmful or tricky prompts.

Input Variation Robustness: Handling natural variations like paraphrasing and typos.

Domain Robustness: Managing out-of-distribution or unfamiliar inputs.

This post will focus specifically on Input Variation Robustness. Adversarial and Domain Robustness will be covered in future posts.

General-purpose Evaluation Datasets

Robustness evaluation is well-researched, with several open-source datasets available. Prominent datasets include AdvGLUE and PAWS.

AdvGLUE Example

Original: "The movie was fantastic!"

Perturbed: "The film was fntstic!"

Label: Positive

PAWS Example

Sentence 1: "The cat sat on the mat."

Sentence 2: "On the mat sat the cat."

Label: Paraphrase

Limitations of General-purpose Datasets

General-purpose datasets are insufficient for two primary reasons:

Domain Relevance: As discussed in previous posts, testing LLM-powered products in their specific use-case context is essential. Generic datasets often fail to capture the nuances of the domain for which the LLM is designed. Qinyuan Ye et al., in their paper How Predictable Are Large Language Model Capabilities? A Case Study on BIG-bench, discuss how the predictability of benchmark performance underscores this limitation.

Benchmark Contamination: Recent studies, such as the paper by Chunyuan Deng et al., Investigating Data Contamination in Modern Benchmarks for Large Language Models, indicate a gap between inflated benchmark scores and actual LLM performance, partly due to data contamination—LLMs may inadvertently "cheat" by training on benchmark datasets. Thus, it is crucial to evaluate robustness using custom-curated datasets tailored to your specific use case.

Evaluating Robustness for Specific Use Cases

Methodology

Various methodologies exist in research for evaluating robustness. A practical approach I use is assessing worst-case performance:

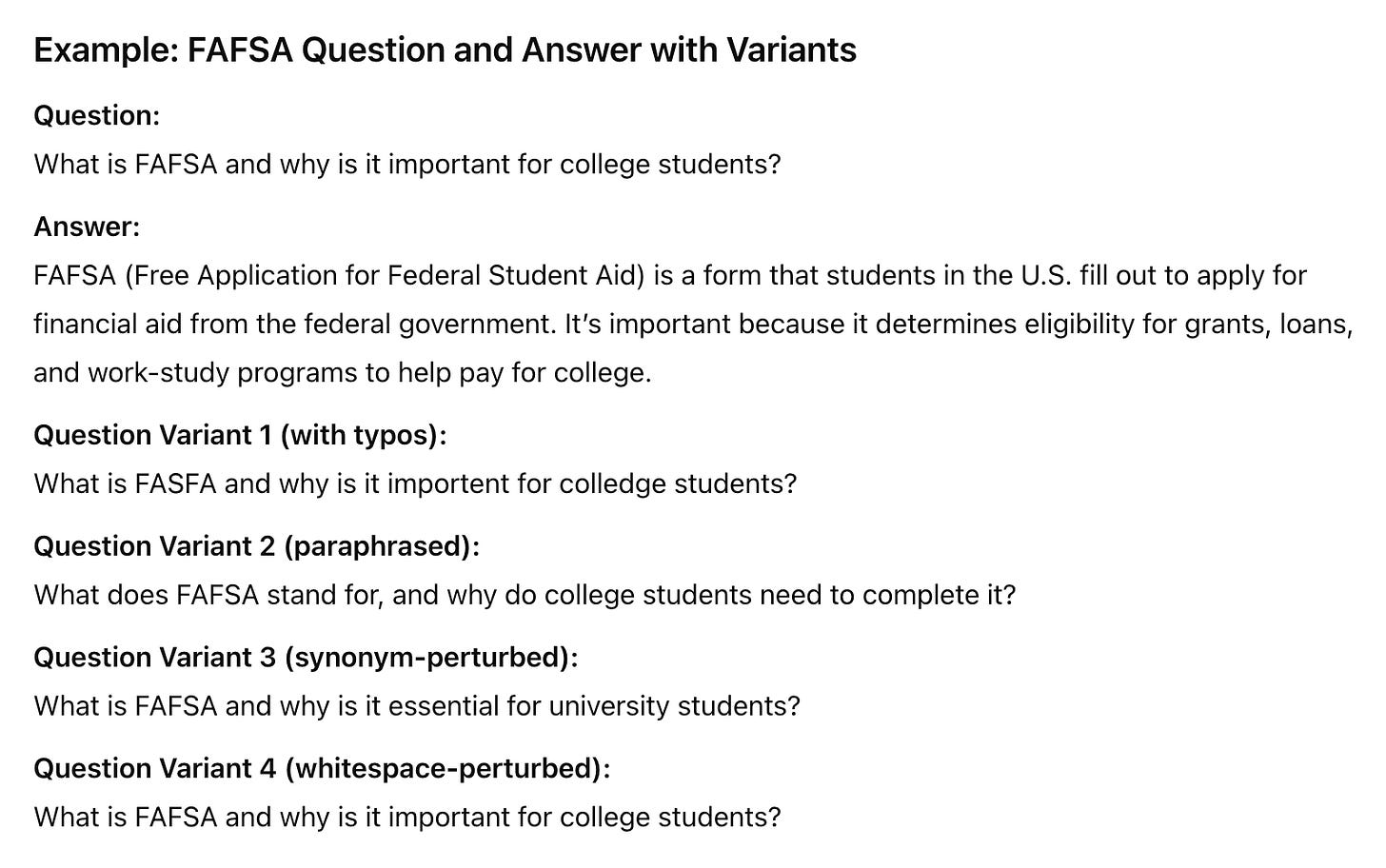

Step 1: Generate multiple variations of each question (e.g., V1, V2, V3, V4).

Step 2: Send each variation to the AI model.

Step 3: Evaluate AI responses. If responses are (Pass, Pass, Fail, Pass), the overall performance for this question is marked as "Fail" due to the worst-case outcome.

Step 4: Repeat this for every question in the test set.

The variations in Step 1 are generated using perturbation techniques. Perturbations can include typos, misspellings, synonym substitutions, or formatting changes. You can leverage your existing accuracy test set to create a robustness evaluation dataset.

Pseudo-Code for Dataset Creation

Here is a pseudo python code for generating robustness test dataset using the accuracy test set.

robustness_test_set = {}

for idx, (question, answer) in enumerate(accuracy_test_set):

robustness_test_set[idx] = [

typo_perturbation(question),

misspelling_perturbation(question),

synonym_perturbation(question),

formatting_perturbation(question)

]After generating the perturbed test set, run it through your LLM and evaluate responses using the "LLM-as-a-judge" methodology to measure robustness effectively.

Okay, that’s it for now on safety evaluation! I hope you found it useful. If you have thoughts, experiences, or critiques, I’d love to hear from you—let’s keep the conversation going and learn from each other. Thanks for reading and for being part of this evolving journey!

This series from Data Science x AI is free. If you find the posts valuable, becoming a paid subscriber is a lovely way to show support! ❤